It is commonly believed that smaller class sizes are associated with stronger student performance. While it is not clear what the optimal class size is, or whether it varies with the nature of the course material, there are several intuitive reasons to expect the relationship to hold in general:

- Each student gets more attention from the instructor.

- The pace of the class can more easily be moderated to accommodate fewer students.

- In a more intimate environment, students are likely to feel less intimidated and more willing to ask questions and share perspectives.

We looked to our data for evidence that either supports or contradicts this relationship. We reviewed data from seven programs of study, averaged across all available terms from Fall 2005 through Summer 2009. Although these programs were not randomly selected, they represent a diverse set of courses.

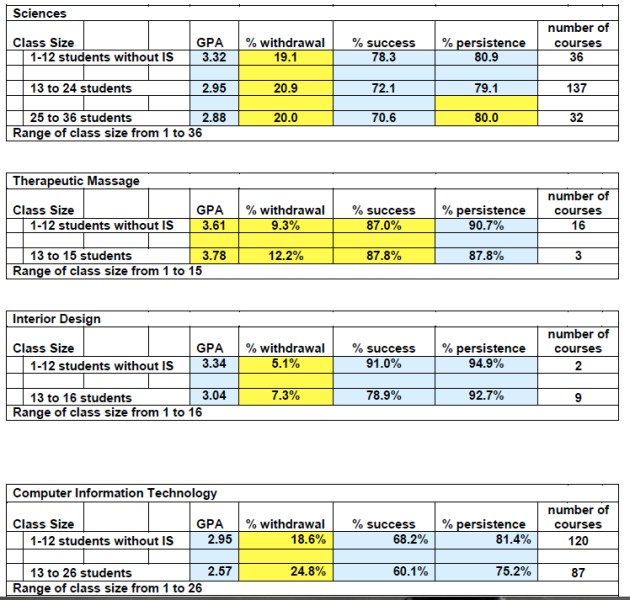

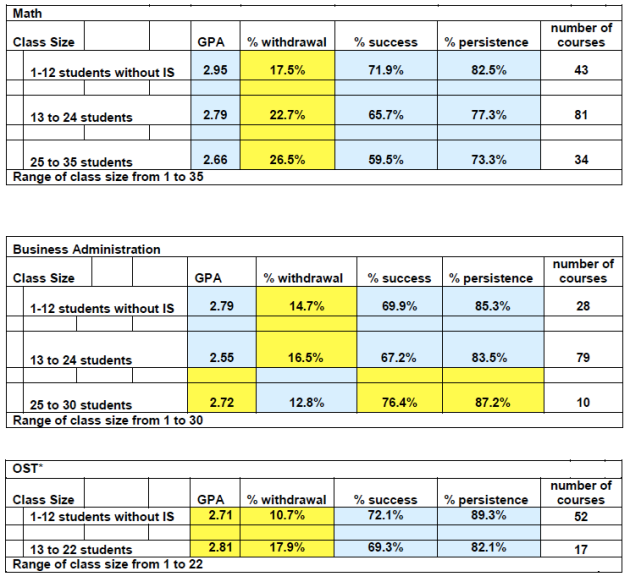

Across programs, no course had more than 36 students. For this analysis, class sizes were arbitrarily divided into three segments: sections with up to 12 students are compared with sections of 13 to 24 and of 25 to 36 students, where applicable (not every program had classes that large).

Independent study courses were excluded from the analyses. By definition, these courses have very few students following individualized study plans, which could bias a more general look at the effect of class size.
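The segmentation and exclusion rules above can be expressed as a short Python sketch. The `Section` record and its field names are hypothetical, since the post does not describe the underlying data layout, and the top segment is labeled "25+" because no course in this data exceeded 36 students.

```python
from dataclasses import dataclass


@dataclass
class Section:
    # Hypothetical record layout; the actual data schema is not described in this post.
    program: str
    course: str
    enrollment: int
    is_independent_study: bool


def size_segment(enrollment: int) -> str:
    """Map a section's enrollment to one of the three class size segments."""
    if enrollment < 1:
        raise ValueError("enrollment must be at least 1")
    if enrollment <= 12:
        return "1-12"
    if enrollment <= 24:
        return "13-24"
    return "25+"  # 25 to 36 in this data; no course exceeded 36 students


def segment_sections(sections):
    """Group sections by (program, segment), excluding independent study courses."""
    groups = {}
    for s in sections:
        if s.is_independent_study:
            continue  # individualized study plans could bias the class size comparison
        key = (s.program, size_segment(s.enrollment))
        groups.setdefault(key, []).append(s)
    return groups
```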

Four metrics were calculated for each class size segment within each program:

- Average GPA,

- Percentage withdrawing (student- or instructor-initiated),

- Percentage success (the proportion of students who received a grade of A, B, or C),

- Persistence rate (the proportion of students who completed the course, regardless of grade).
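A minimal sketch of the four metrics for a single segment follows. It assumes each enrollment carries one final grade code: A through F on a 4-point scale, "W" for any withdrawal (student- or instructor-initiated), and other codes such as "I" (incomplete) counted in the denominator only. These codes, and treating "completed" as receiving a letter grade from A to F, are assumptions; the post does not say how grades are recorded. Average GPA is computed here as the mean grade points of students who received a letter grade.

```python
# Assumed 4-point scale; the institution's actual grade coding may differ.
GRADE_POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}
WITHDRAWAL_CODES = {"W"}  # hypothetical code for student- or instructor-initiated withdrawal
SUCCESS_GRADES = {"A", "B", "C"}


def segment_metrics(grades):
    """Compute the four metrics from the final grade codes of one class size segment."""
    n = len(grades)
    if n == 0:
        raise ValueError("segment has no enrollments")
    # Grade points for students who completed the course with a letter grade A-F.
    graded = [GRADE_POINTS[g] for g in grades if g in GRADE_POINTS]
    return {
        "avg_gpa": sum(graded) / len(graded) if graded else float("nan"),
        "pct_withdrawing": 100 * sum(g in WITHDRAWAL_CODES for g in grades) / n,
        "pct_success": 100 * sum(g in SUCCESS_GRADES for g in grades) / n,
        "persistence": 100 * len(graded) / n,
    }
```

For a segment with grades `["A", "B", "C", "D", "F", "W", "W", "I"]`, this yields an average GPA of 2.0, 25% withdrawing, 37.5% success, and 62.5% persistence. Persistence and withdrawal need not sum to 100% when codes like "I" are present.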

The number of courses in each class size segment is also noted. All details appear in the tables at the end of this analysis.

The specific hypotheses tested in this analysis are:

- Across programs, smaller classes (those with 12 or fewer students) are associated with higher average GPAs than classes with more than 12 students.

- Across programs, smaller classes (those with 12 or fewer students) are also associated with higher success rates and higher rates of persistence than classes with more than 12 students.

- Across programs, smaller classes (those with 12 or fewer students) are associated with lower rates of withdrawal than classes with more than 12 students.

The tables are color-coded so that the direction of each outcome is easy to see. Blue shading indicates that values decline as class size increases; yellow shading indicates that values increase as class size increases. (Note: these metrics tend to be intercorrelated.)
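One way to assign the blue and yellow shading is to check whether a metric moves in a single direction across the segments, ordered from smallest to largest. This sketch assumes shading reflects a consistent direction across all segments; the tables themselves may apply it differently, and rows that move in both directions (like the Sciences persistence pattern described later) are labeled "mixed" here.

```python
def shading(values):
    """Classify a metric's values, ordered from the smallest to the largest segment."""
    if len(values) < 2:
        raise ValueError("need at least two segments to compare")
    steps = [later - earlier for earlier, later in zip(values, values[1:])]
    if all(step < 0 for step in steps):
        return "blue"  # values decline as class size increases
    if all(step > 0 for step in steps):
        return "yellow"  # values increase as class size increases
    return "mixed"  # ties or a change in direction, e.g. a dip followed by a rise
```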

Across the aggregate of courses within each of the 7 programs…

- GPAs in smaller classes were highest in 5 of the 7 programs.

- Success rates in smaller classes were highest in 6 of the 7 programs.

- Persistence rates in smaller classes were highest in 7 of the 7 programs.

- Withdrawal rates were lowest in smaller classes in 7 of the 7 programs.
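The tallies above can be reproduced by counting the programs in which the smallest segment outperforms every larger segment, reading "highest" (or "lowest") as strictly better than each larger segment. The dictionary layout below is hypothetical, and ties are assumed not to count. For withdrawal rates, where lower is better, pass `higher_is_better=False`.

```python
def smallest_is_best(values, higher_is_better=True):
    """True if the first (1-12) segment beats every larger segment."""
    first, rest = values[0], values[1:]
    if higher_is_better:
        return all(first > v for v in rest)
    return all(first < v for v in rest)


def tally(by_program, higher_is_better=True):
    """Return (programs where the smallest segment is best, total programs)."""
    wins = sum(smallest_is_best(v, higher_is_better) for v in by_program.values())
    return wins, len(by_program)
```

A program can count toward the tally while still showing a mixed pattern: hypothetical values of 91, 86, and 88 put the smallest segment on top even though the metric dips and then rises.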

Taken together, this is compelling evidence in support of the hypotheses. However, when all of the data are reviewed, not all of these relationships move in a consistent direction across the three class size segments.

- For example, within the Sciences program, persistence rates decline from the smallest segment (12 or fewer students) to the next segment (13 to 24), but increase from the 13 to 24 segment to the largest segment (25+).

- All of the metrics calculated for the Business Administration program also show nonlinear patterns.

It is not clear why the patterns deviate for these two programs. I welcome any discussion around these anomalies; insights are often hidden within them.

Earlier blogs and discussions confirm the belief that persistence and retention are positively related, but the strength of that relationship is unclear. We can only suggest, as we have before, that increases in persistence will lead to increases in retention.

In this data set, persistence in classes of 12 or fewer students is, on average, 3.9 percentage points higher than in classes of 13 to 24 students. There is no guarantee that retention would rise by 3.9 points if classes were limited to 12 or fewer students, but a cost-benefit analysis could estimate what it would cost to reduce average class size and what increase in retention would justify the expenditure.
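The arithmetic behind the 3.9-point figure, and a simple break-even check, can be sketched as follows. The averaging assumes the figure is an unweighted mean of per-program differences, which the post does not state explicitly. The break-even function is a hypothetical framing of the cost-benefit analysis; its inputs (added cost, students affected, value of each retained student) are planning figures an analyst would supply, not values from this analysis.

```python
from statistics import mean


def avg_persistence_gain(by_program):
    """Unweighted mean, across programs, of persistence(1-12) minus persistence(13-24), in points."""
    return mean(rates["1-12"] - rates["13-24"] for rates in by_program.values())


def break_even_retention_gain(added_cost, students_affected, value_per_retained_student):
    """Retention gain, in percentage points, at which added revenue equals added cost.

    All inputs are hypothetical planning figures supplied by the analyst.
    """
    if students_affected <= 0 or value_per_retained_student <= 0:
        raise ValueError("students_affected and value_per_retained_student must be positive")
    return 100 * added_cost / (students_affected * value_per_retained_student)
```

If the break-even figure is well below the expected retention gain, the expenditure may be justified. Because a persistence gain need not translate one-for-one into retention, the expected retention gain is itself an assumption.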

Submitted by Cindy Schersching, PhD, January 16, 2010

Key metrics by class size across select programs (Fall 2005 – Summer 2009)

Legend: Blue shading indicates values decline as class size increases. Yellow shading indicates values increase as class size increases.

*This definition of OST includes BUS 151, CTS 125, CTS 130, and CS 165, and excludes OST 080 and 131, per the program head.